How to Build & Host a Full-Stack App on the Edge for Free (Cloudflare Pages + Workers)

Myth: free hosting is only for static sites

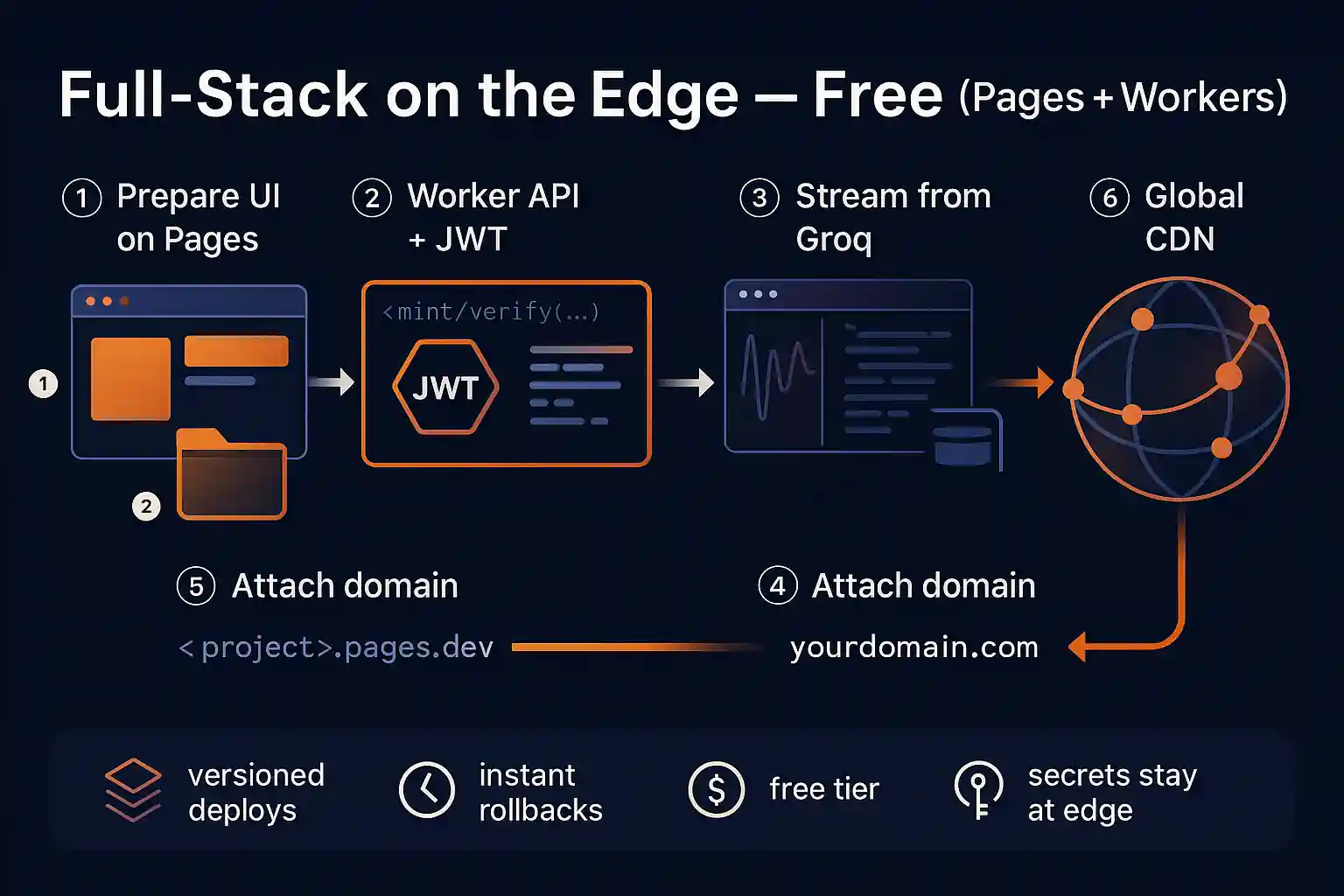

The old rule of thumb—“free” means static only—doesn’t hold anymore. With Cloudflare, you can run a fully capable app at the edge: Pages serves your UI from a global CDN, while Workers runs your backend logic close to users. Secrets live on the serverless edge, not in the browser bundle, and you still deploy in minutes.

Architecture in one sentence

Static-first UI on Pages; API on a Worker; optional Pages Functions for server-side routes baked into a Pages project, plus state via Durable Objects or D1 when you need it. Pages gives you instant HTTPS and versioned deploys, while the Worker enforces auth, rate limits, and outbound calls.
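A minimal sketch of the Worker side of this split, assuming a TypeScript module Worker. The binding names `GROQ_API_KEY` and `JWT_SECRET` come from the blueprint below; `ALLOWED_ORIGIN`, `matchRoute`, and `corsHeaders` are hypothetical names for illustration. It shows the shape only: routing, a CORS preflight answer scoped to one Pages origin, and a placeholder where auth, rate limiting, and the outbound model call would go.

```typescript
// Bindings this Worker expects. GROQ_API_KEY and JWT_SECRET are the secrets named
// in the article; ALLOWED_ORIGIN (e.g. your *.pages.dev URL) is a hypothetical addition.
export interface Env {
  GROQ_API_KEY: string;
  JWT_SECRET: string;
  ALLOWED_ORIGIN: string;
}

type Route = "chat" | "api" | "not-found";

// Map a pathname to one of the routes this Worker serves.
export function matchRoute(pathname: string): Route {
  if (pathname === "/chat") return "chat";
  if (pathname === "/api" || pathname.startsWith("/api/")) return "api";
  return "not-found";
}

// Grant CORS only to the allowed Pages origin; Vary keeps caches from mixing origins.
export function corsHeaders(origin: string | null, allowedOrigin: string): Record<string, string> {
  if (origin !== allowedOrigin) return { Vary: "Origin" };
  return {
    "Access-Control-Allow-Origin": allowedOrigin,
    "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
    "Access-Control-Allow-Headers": "Authorization, Content-Type",
    Vary: "Origin",
  };
}

const worker = {
  async fetch(request: Request, env: Env): Promise<Response> {
    const url = new URL(request.url);
    const cors = corsHeaders(request.headers.get("Origin"), env.ALLOWED_ORIGIN);

    // Answer CORS preflight requests directly, without running any route logic.
    if (request.method === "OPTIONS") {
      return new Response(null, { status: 204, headers: cors });
    }

    switch (matchRoute(url.pathname)) {
      case "chat":
        // Placeholder: verify the JWT, check the rate limit, then stream from Groq.
        return new Response("Not implemented", { status: 501, headers: cors });
      case "api":
        return new Response(JSON.stringify({ ok: true, path: url.pathname }), {
          headers: { ...cors, "Content-Type": "application/json" },
        });
      default:
        return new Response("Not found", { status: 404, headers: cors });
    }
  },
};

export default worker;
```

Keeping `matchRoute` and `corsHeaders` as plain functions makes them easy to unit-test without a Workers runtime.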

The zero-cost blueprint

- Ship the UI on Pages. Upload prebuilt assets with Direct Upload or connect a Git repo for CI. You get a `<project>.pages.dev` URL with automatic TLS and rollbacks. (Refs: Docs)

- Add a Worker for your API. Create a Worker and expose routes like `/chat` or `/api/*`. Bind environment variables for secrets (e.g., `GROQ_API_KEY`, `JWT_SECRET`) and verify a short-lived JWT on every call. (Refs: Limits)

- Stream model outputs via Groq. Call Groq’s OpenAI-compatible endpoint and stream tokens to the browser; the UI renders partial output for a real-time feel. (Refs: OpenAI-compat · Streaming · Llama-3.3-70B)

- Gate usage. Start simple with per-visitor caps in `localStorage` (easy for a user to clear, so treat them as a soft nudge), then move to server-side limits with Durable Objects or D1 for persistent quotas. (Refs: DO rate-limiter example · D1 limits)

- Attach your domain. Add a custom domain to your Pages project and 301-redirect the `*.pages.dev` staging host to your branded domain. (Refs: Direct Upload)
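For the server-side step, the counting logic can live apart from wherever the counts are stored. The sketch below uses a fixed-window counter, which is an assumed algorithm choice (Cloudflare's Durable Objects rate-limiter example may use a different one). `checkFixedWindow` and `MemoryLimiter` are hypothetical names; the in-memory `Map` is neither durable nor shared across Worker isolates, so in production you would persist `WindowState` in a Durable Object or a D1 table instead.

```typescript
// Fixed-window rate limiting: at most `limit` accepted hits per key in each window.

export interface WindowState {
  windowStart: number; // epoch ms at which the current window began
  count: number; // hits accepted in that window
}

export interface LimitDecision {
  allowed: boolean;
  remaining: number;
  resetAt: number; // epoch ms when the next window opens
}

// Pure decision function: takes the stored state, returns a decision and the state to store.
export function checkFixedWindow(
  state: WindowState | undefined,
  now: number,
  limit: number,
  windowMs: number,
): { decision: LimitDecision; next: WindowState } {
  // Align windows to multiples of windowMs so every key resets on the same boundary.
  const windowStart = Math.floor(now / windowMs) * windowMs;
  const count = state !== undefined && state.windowStart === windowStart ? state.count : 0;
  const allowed = count < limit;
  const next: WindowState = { windowStart, count: allowed ? count + 1 : count };
  return {
    decision: { allowed, remaining: Math.max(0, limit - next.count), resetAt: windowStart + windowMs },
    next,
  };
}

// In-memory stand-in for durable storage; state is lost when the isolate is recycled.
export class MemoryLimiter {
  private readonly windows = new Map<string, WindowState>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  hit(key: string, now: number = Date.now()): LimitDecision {
    const { decision, next } = checkFixedWindow(this.windows.get(key), now, this.limit, this.windowMs);
    this.windows.set(key, next);
    return decision;
  }
}
```

In a Worker, a natural key is the caller's IP from the `CF-Connecting-IP` header or the `sub` claim of a verified JWT; when `allowed` is false, respond with HTTP 429 and a `Retry-After` derived from `resetAt`. Fixed windows are simple but can admit up to 2× `limit` in a short burst straddling a window boundary; a sliding window or token bucket smooths that out.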

Security & ergonomics

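- JWTs, not raw keys. Issue short-lived tokens server-side and validate them on every Worker request. Scope CORS so only your Pages origin can call your API from a browser; CORS is enforced by browsers only, so the JWT check is what actually keeps other clients out.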

- Secrets stay at the edge. Store provider keys as encrypted Worker secrets (for example with `wrangler secret put GROQ_API_KEY`), never ship them to the client, and rotate them as needed.

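- Cache where it counts. Workers execute before the cache, so every request routed to a Worker counts as an invocation even if its response is cacheable. Send only dynamic calls through the Worker, serve cacheable GETs as static Pages assets where you can, and set `Cache-Control` headers so browsers reuse responses instead of calling again.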
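Validating a short-lived JWT needs nothing beyond the Web Crypto API that Workers expose. A minimal sketch, assuming HS256 tokens signed with the `JWT_SECRET` binding and an `exp` claim in seconds; `verifyHs256` and its helpers are hypothetical names, and the sketch deliberately skips `nbf`, `iss`, and `aud` checks. For anything beyond this, a maintained library such as `jose` is the safer choice.

```typescript
// Decode a base64url segment (RFC 4648 §5, unpadded) into bytes.
function base64UrlToBytes(segment: string) {
  const b64 = segment.replace(/-/g, "+").replace(/_/g, "/");
  const padded = b64.padEnd(Math.ceil(b64.length / 4) * 4, "=");
  return Uint8Array.from(atob(padded), (c) => c.charCodeAt(0));
}

function decodeJson(segment: string): unknown {
  return JSON.parse(new TextDecoder().decode(base64UrlToBytes(segment)));
}

export interface JwtClaims {
  sub?: string;
  exp?: number;
  [claim: string]: unknown;
}

// Split and decode a compact JWT; null if it is structurally malformed.
function parseToken(token: string) {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [headerB64, payloadB64, sigB64] = parts;
  try {
    return {
      header: decodeJson(headerB64) as { alg?: unknown } | null,
      claims: decodeJson(payloadB64),
      signature: base64UrlToBytes(sigB64),
      signingInput: new TextEncoder().encode(`${headerB64}.${payloadB64}`),
    };
  } catch {
    return null; // malformed base64url or JSON
  }
}

// Returns the claims of a valid, unexpired HS256 token, or null otherwise.
export async function verifyHs256(
  token: string,
  secret: string,
  nowSec: number = Math.floor(Date.now() / 1000),
): Promise<JwtClaims | null> {
  const parsed = parseToken(token);
  if (parsed === null) return null;
  // Pin the algorithm instead of trusting the header (blocks "alg": "none").
  if (parsed.header?.alg !== "HS256") return null;
  if (typeof parsed.claims !== "object" || parsed.claims === null) return null;
  const claims = parsed.claims as JwtClaims;

  const key = await crypto.subtle.importKey(
    "raw",
    new TextEncoder().encode(secret),
    { name: "HMAC", hash: "SHA-256" },
    false,
    ["verify"],
  );
  // Let Web Crypto check the HMAC rather than comparing signature strings by hand.
  const valid = await crypto.subtle.verify("HMAC", key, parsed.signature, parsed.signingInput);
  if (!valid) return null;

  // Short-lived tokens: require an exp claim and reject anything at or past it.
  if (typeof claims.exp !== "number" || claims.exp <= nowSec) return null;
  return claims;
}
```

Call it before any expensive work: read the token from an `Authorization: Bearer <token>` header and return 401 when the result is `null`. Pinning `alg` to HS256 rather than reading it from the header prevents algorithm-confusion tricks such as `"alg": "none"`.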

Pages Functions vs. a standalone Worker

Pages Functions are great when your routes live alongside the UI, but dashboard drag-and-drop uploads do not compile a `functions/` folder. Deploy Functions with Wrangler (`wrangler pages deploy`), or keep a separate Worker if you prefer a clean UI/API split.
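If you choose Pages Functions, a route is just a file under `functions/`. A minimal sketch, assuming a file at `functions/api/hello.ts` (which Pages' file-based routing serves at `/api/hello`) and a hypothetical `GREETING` variable. The local `Context` interface covers only the two fields used here; the full context type ships with `@cloudflare/workers-types`.

```typescript
// functions/api/hello.ts: served at /api/hello by Pages' file-based routing.

interface Env {
  GREETING?: string; // hypothetical variable set in the Pages project settings
}

// Minimal slice of the context object Pages passes to a Function.
interface Context {
  request: Request;
  env: Env;
}

// onRequestGet handles GET only; other methods on this path fall through.
export async function onRequestGet(context: Context): Promise<Response> {
  const url = new URL(context.request.url);
  const name = url.searchParams.get("name") ?? "world";
  const greeting = context.env.GREETING ?? "Hello";
  return new Response(JSON.stringify({ message: `${greeting}, ${name}!` }), {
    headers: { "Content-Type": "application/json" },
  });
}
```

Running `npx wrangler pages deploy <output-dir>` from the project root should let Wrangler bundle the `functions/` folder alongside your static assets, which the dashboard drag-and-drop path does not do.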

Free-plan realities

On the Workers Free plan, you get up to 100,000 requests per day per account, resetting at midnight UTC; Pages Functions invocations count toward the same quota. Static assets on Pages are served with unlimited requests and bandwidth on the free plan. D1 and Durable Objects include practical free allowances for metering and basic state; upgrade when you outgrow them.
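A quick way to sanity-check the free quota is to turn it into numbers. The sketch below uses hypothetical helper names and an illustrative traffic model (each visit triggers a fixed number of Worker or Pages Functions invocations; plain static assets are not counted). It computes the average rate the quota sustains, the share a given traffic level would use, and the time until the midnight-UTC reset.

```typescript
// Workers Free plan: 100,000 requests per day per account, reset at 00:00 UTC.
export const FREE_DAILY_REQUESTS = 100_000;
const SECONDS_PER_DAY = 86_400;

// Average request rate (per second) the daily quota can sustain.
export function averageRatePerSecond(dailyQuota: number = FREE_DAILY_REQUESTS): number {
  return dailyQuota / SECONDS_PER_DAY;
}

// Illustrative traffic model: invocations = visitors × invocations per visit.
export function quotaUsage(
  visitorsPerDay: number,
  invocationsPerVisit: number,
  dailyQuota: number = FREE_DAILY_REQUESTS,
) {
  const requests = visitorsPerDay * invocationsPerVisit;
  return { requests, fractionOfQuota: requests / dailyQuota, fits: requests <= dailyQuota };
}

// Milliseconds until the next 00:00 UTC, when the daily counter resets.
export function msUntilUtcReset(now: Date): number {
  // Date.UTC normalizes day overflow, e.g. January 32 becomes February 1.
  const nextMidnight = Date.UTC(now.getUTCFullYear(), now.getUTCMonth(), now.getUTCDate() + 1);
  return nextMidnight - now.getTime();
}
```

100,000 requests spread over 86,400 seconds averages out to roughly 1.16 requests per second. For example, 2,000 visitors who each send 12 chat messages would use 24,000 requests, or 24% of the daily allowance. Real traffic is bursty, so leave headroom.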

Step-by-step: minimal chat app

- Frontend (Pages): a simple HTML/CSS/JS chat UI that connects to `/chat` and renders streamed text.

- Backend (Worker): validates a JWT, calls Groq’s `/openai/v1/chat/completions` endpoint, and forwards the event stream to the client.

- Guardrails: a per-IP or per-session limiter (DO or D1) and short cache TTLs for read endpoints.
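The backend step can be sketched as a handler that forwards Groq's response body without buffering it. Assumptions: the base URL `https://api.groq.com/openai/v1`, the model ID `llama-3.3-70b-versatile` (check Groq's model list before relying on it), and the hypothetical names `buildGroqRequest`, `readMessages`, `handleChat`, and `deltaFromSseLine`. JWT verification and rate limiting are left out and would run before the upstream call. The last function is a frontend helper that extracts text from one line of the OpenAI-style event stream.

```typescript
// Hypothetical bindings: GROQ_API_KEY is the Worker secret named in the article.
export interface Env {
  GROQ_API_KEY: string;
}

export interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Assumptions: Groq's OpenAI-compatible endpoint and a current model ID.
const GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions";
const MODEL = "llama-3.3-70b-versatile";

// Build the upstream request separately so it can be tested without network access.
export function buildGroqRequest(messages: ChatMessage[], apiKey: string): Request {
  return new Request(GROQ_CHAT_URL, {
    method: "POST",
    headers: { Authorization: `Bearer ${apiKey}`, "Content-Type": "application/json" },
    body: JSON.stringify({ model: MODEL, messages, stream: true }),
  });
}

// Parse { "messages": [...] } from the browser; null means a bad request.
async function readMessages(request: Request): Promise<ChatMessage[] | null> {
  try {
    const body = (await request.json()) as { messages?: unknown };
    return Array.isArray(body.messages) ? (body.messages as ChatMessage[]) : null;
  } catch {
    return null;
  }
}

// Worker route handler; JWT and rate-limit checks would run before this point.
export async function handleChat(request: Request, env: Env): Promise<Response> {
  const messages = await readMessages(request);
  if (messages === null) return new Response("Bad request", { status: 400 });

  const upstream = await fetch(buildGroqRequest(messages, env.GROQ_API_KEY));
  if (!upstream.ok || upstream.body === null) {
    return new Response("Upstream error", { status: 502 });
  }
  // Hand the ReadableStream straight back so tokens reach the browser as they arrive.
  return new Response(upstream.body, {
    headers: { "Content-Type": "text/event-stream", "Cache-Control": "no-store" },
  });
}

// Frontend helper: extract the text delta from one complete SSE line.
export function deltaFromSseLine(line: string): string | null {
  if (!line.startsWith("data:")) return null; // comments, blank lines, other fields
  const data = line.slice("data:".length).trim();
  if (data === "" || data === "[DONE]") return null; // end-of-stream marker
  const chunk = JSON.parse(data) as { choices?: { delta?: { content?: string } }[] };
  return chunk.choices?.[0]?.delta?.content ?? null;
}
```

On the client, read `response.body` with a `TextDecoder`, buffer until each newline, and pass only complete lines to `deltaFromSseLine`; network chunks can end mid-line, so parsing raw chunks directly will occasionally fail.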

Why this pattern feels “unfairly good”

You get a premium user experience with tiny infrastructure: a static UI that never waits on origin, a Worker that wakes for the hard parts, and a clear on-ramp from free to paid when usage justifies it. Most importantly, you keep velocity—deploy fast, iterate faster, and scale cleanly.

References

- Original guide: Build & Host a Full-Stack App on the Edge for Free

- Full guide on how to add a rate-limiter to your app's backend

- Cloudflare Pages — Overview

- Pages — Direct Upload

- Pages Functions — Docs

- Pages Functions — Get Started (Wrangler deploy)

- Workers — Limits (Free plan daily request limit)

- Wrangler — Commands

- Durable Objects — Build a Rate Limiter

- Cloudflare D1 — Limits

- Groq — OpenAI Compatibility

- Groq — Text & Chat (Streaming)

- Groq — Llama-3.3-70B Versatile (128k)

- Groq — Rate Limits